Gain Deeper Cost Visibility and Optimize Your Amazon S3 Storage At Scale with Amazon S3 Storage Lens

As data lakes, analytics pipelines and AI workloads continue to grow in scale, the volume of data stored in Amazon Simple Storage Service (Amazon S3) can expand rapidly. While S3 provides unmatched durability, scalability and availability, it’s easy for costs to rise unexpectedly if storage is not actively managed. Unused versions of objects, incomplete multipart uploads and a lack of lifecycle management rules can all contribute to unnecessary spend.

To help customers better understand, monitor and optimize their storage usage, Amazon S3 Storage Lens provides organization-wide visibility into S3 usage and activity trends, along with advanced metrics for cost optimization and data protection. In this post, we’ll explore how you can use S3 Storage Lens to track cost optimization metrics across your AWS Organization, identify inefficient storage patterns and define lifecycle policies that automatically reduce costs - all while maintaining data durability and compliance.

Understand Your Storage with S3 Storage Lens Metrics

Amazon S3 Storage Lens delivers a rich set of metrics and insights about your storage usage and activity, aggregated across your entire organization or specific accounts and buckets. It provides both free metrics (which are automatically available) and advanced metrics and recommendations, which include deeper insights into cost optimization and data protection.

Within the context of cost optimization, S3 Storage Lens exposes several key metrics, including CurrentVersionStorageBytes, NonCurrentVersionStorageBytes, DeleteMarkerObjectCount and IncompleteMultipartUploadStorageBytes, that help you understand how efficiently your data is stored and where potential cost savings can be achieved [1]. The CurrentVersionStorageBytes metric represents the number of bytes held by the active, current versions of your objects - in other words, the data you’re primarily using. In contrast, NonCurrentVersionStorageBytes represents the storage consumed by previous versions of objects that may no longer be actively used by your applications or workflows, yet continue to incur the same storage costs as current data. A high ratio of noncurrent version bytes to total storage can indicate opportunities to enable or refine version transition and expiration policies that move infrequently accessed data to a colder, less expensive storage class or delete it entirely.

Similarly, DeleteMarkerObjectCount and DeleteMarkerStorageBytes help identify when many delete markers remain in versioned buckets, a common source of hidden cost in long-lived data lakes. Over time, delete markers and noncurrent versions can accumulate, resulting in higher storage charges without delivering any additional business value.

Another valuable metric is IncompleteMultipartUploadStorageBytes, which describes storage consumed by multipart uploads that were started but never completed. In environments with frequent data ingestion and transformation pipelines, incomplete uploads can persist indefinitely unless lifecycle rules are configured to clean them up. According to AWS re:Post, “The parts remain in your Amazon S3 storage until the multipart upload completes or cancels. Amazon S3 stores the parts from the incomplete multipart uploads and charges your AWS account for the storage costs.” [2] This can be a significant contributor to storage cost growth, especially in large-scale data lakes where uploads happen programmatically.
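The noncurrent-to-total ratio described earlier in this section can be sketched in a few lines of Python. This is a minimal illustration, not part of S3 Storage Lens itself: it assumes you have already loaded per-bucket metric values (for example from a Storage Lens metrics export) into plain dictionaries keyed by metric name, it approximates total storage as current plus noncurrent bytes, and the 0.3 threshold and function names are hypothetical.

```python
from typing import Dict, List, Tuple


def noncurrent_ratio(metrics: Dict[str, float]) -> float:
    """Share of bytes held by noncurrent versions (0.0 for an empty bucket)."""
    current = metrics.get("CurrentVersionStorageBytes", 0)
    noncurrent = metrics.get("NonCurrentVersionStorageBytes", 0)
    # Assumption: total is approximated as current + noncurrent bytes;
    # delete markers and incomplete multipart uploads are ignored here.
    total = current + noncurrent
    return noncurrent / total if total else 0.0


def rank_buckets(
    per_bucket: Dict[str, Dict[str, float]], threshold: float = 0.3
) -> List[Tuple[str, float]]:
    """Return (bucket, ratio) pairs at or above the threshold, worst first."""
    flagged = [
        (name, noncurrent_ratio(metrics)) for name, metrics in per_bucket.items()
    ]
    flagged = [(name, ratio) for name, ratio in flagged if ratio >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)
```

Buckets at the top of the ranked list are the first candidates for noncurrent version transition or expiration rules.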

Turning Insight into Action with Lifecycle Policies

Once S3 Storage Lens identifies inefficiencies, the next step is to take action. Amazon S3 lifecycle policies support two types of actions: transition actions, which automatically move objects to more cost-effective storage classes, and expiration actions, which delete objects when they’re no longer needed [3].

Transition lifecycle policies help you move infrequently accessed data from the S3 Standard storage class to lower-cost tiers like S3 Standard-IA or S3 Glacier Instant Retrieval after a set number of days. By combining transition rules with metrics like CurrentVersionStorageBytes and NonCurrentVersionStorageBytes, you can ensure that only the data you actively use and need remains in high-performance tiers, while cold data is automatically moved to cheaper alternatives.

Expiration lifecycle policies, on the other hand, help clean up old versions of objects and remove unnecessary delete markers. For example, if Storage Lens shows a high DeleteMarkerObjectCount or NonCurrentVersionObjectCount, you can define expiration rules to permanently delete noncurrent versions or remove expired object delete markers after a certain number of days. This keeps your storage leaner and more predictable, without manual intervention.

For multipart uploads, Storage Lens metrics such as IncompleteMultipartUploadObjectCount and IncompleteMPUObjectCountOlderThan7Days can reveal whether large amounts of partially uploaded data remain. Lifecycle rules can be configured to automatically delete incomplete multipart uploads older than seven days, which ensures that unfinished uploads don’t silently contribute to cost [4].

The following example lifecycle policy creates rules that automatically move and delete data in your Amazon S3 bucket to help manage storage costs over time. It transitions current object versions to the S3 Standard-IA storage class after 30 days, then to S3 Glacier Flexible Retrieval (the GLACIER storage class) after 90 days, and deletes them after one year. For versioned buckets, it moves older (noncurrent) versions of objects to the same GLACIER storage class 30 days after they become noncurrent and deletes them after 180 days. The policy also cleans up incomplete multipart uploads that are more than seven days old and removes expired object delete markers to keep your storage clean and cost-efficient. This type of lifecycle policy is well suited for use cases like data lakes, log archives or temporary backup storage, where objects are rarely accessed after being uploaded and can safely be archived or deleted after a defined period.

    {
      "Rules": [
        {
          "ID": "TransitionCurrentVersions",
          "Filter": {
            "Prefix": ""
          },
          "Status": "Enabled",
          "Transitions": [
            {
              "Days": 30,
              "StorageClass": "STANDARD_IA"
            },
            {
              "Days": 90,
              "StorageClass": "GLACIER"
            }
          ],
          "Expiration": {
            "Days": 365
          }
        },
        {
          "ID": "ManageNonCurrentVersions",
          "Status": "Enabled",
          "NoncurrentVersionTransitions": [
            {
              "NoncurrentDays": 30,
              "StorageClass": "GLACIER"
            }
          ],
          "NoncurrentVersionExpiration": {
            "NoncurrentDays": 180
          },
          "AbortIncompleteMultipartUpload": {
            "DaysAfterInitiation": 7
          },
          "Filter": {
            "Prefix": ""
          }
        },
        {
          "ID": "RemoveExpiredDeleteMarkers",
          "Status": "Enabled",
          "Filter": {
            "Prefix": ""
          },
          "Expiration": {
            "ExpiredObjectDeleteMarker": true
          }
        }
      ]
    }
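Before rolling a policy like this out, it can help to run a quick local sanity check on each rule. The sketch below is an illustration under stated assumptions: the function name and the selection of checks are hypothetical, and they cover only a small part of what Amazon S3 validates when you submit a lifecycle configuration (for instance, that an expiration comes after the last transition).

```python
from typing import List


def check_lifecycle_rule(rule: dict) -> List[str]:
    """Return human-readable problems found in one lifecycle rule."""
    problems = []

    # Current-version transitions should move to colder tiers over time.
    days = [t["Days"] for t in rule.get("Transitions", []) if "Days" in t]
    if any(a >= b for a, b in zip(days, days[1:])):
        problems.append("transition days are not strictly increasing")

    # Expiration must happen after the last transition.
    expire = rule.get("Expiration", {}).get("Days")
    if expire is not None and days and expire <= max(days):
        problems.append("expiration must come after the last transition")

    # Same ordering check for noncurrent versions.
    nc_days = [
        t["NoncurrentDays"]
        for t in rule.get("NoncurrentVersionTransitions", [])
        if "NoncurrentDays" in t
    ]
    nc_expire = rule.get("NoncurrentVersionExpiration", {}).get("NoncurrentDays")
    if nc_expire is not None and nc_days and nc_expire <= max(nc_days):
        problems.append(
            "noncurrent expiration must come after the last noncurrent transition"
        )

    return problems
```

Loading the policy file with `json.load` and calling `check_lifecycle_rule` on each entry in `"Rules"` should return an empty list for every rule in the example above.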

Managing Optimization Across Your Organization

In multi-account environments, manually monitoring and cost-optimizing S3 usage in each account can be time-consuming and error-prone. S3 Storage Lens supports organization-wide dashboards through AWS Organizations, enabling centralized visibility across all accounts. The service allows you to aggregate data for an organization, specific accounts, Regions, buckets and even prefixes. As a prerequisite, you must enable S3 Storage Lens trusted access from the management account and register delegated administrator accounts, which are “used to create S3 Storage Lens configurations and dashboards that collect organization-wide storage metrics and user data” [5]. With this setup, you can aggregate key cost optimization metrics, identify outliers and apply consistent lifecycle policies across all of your environments.

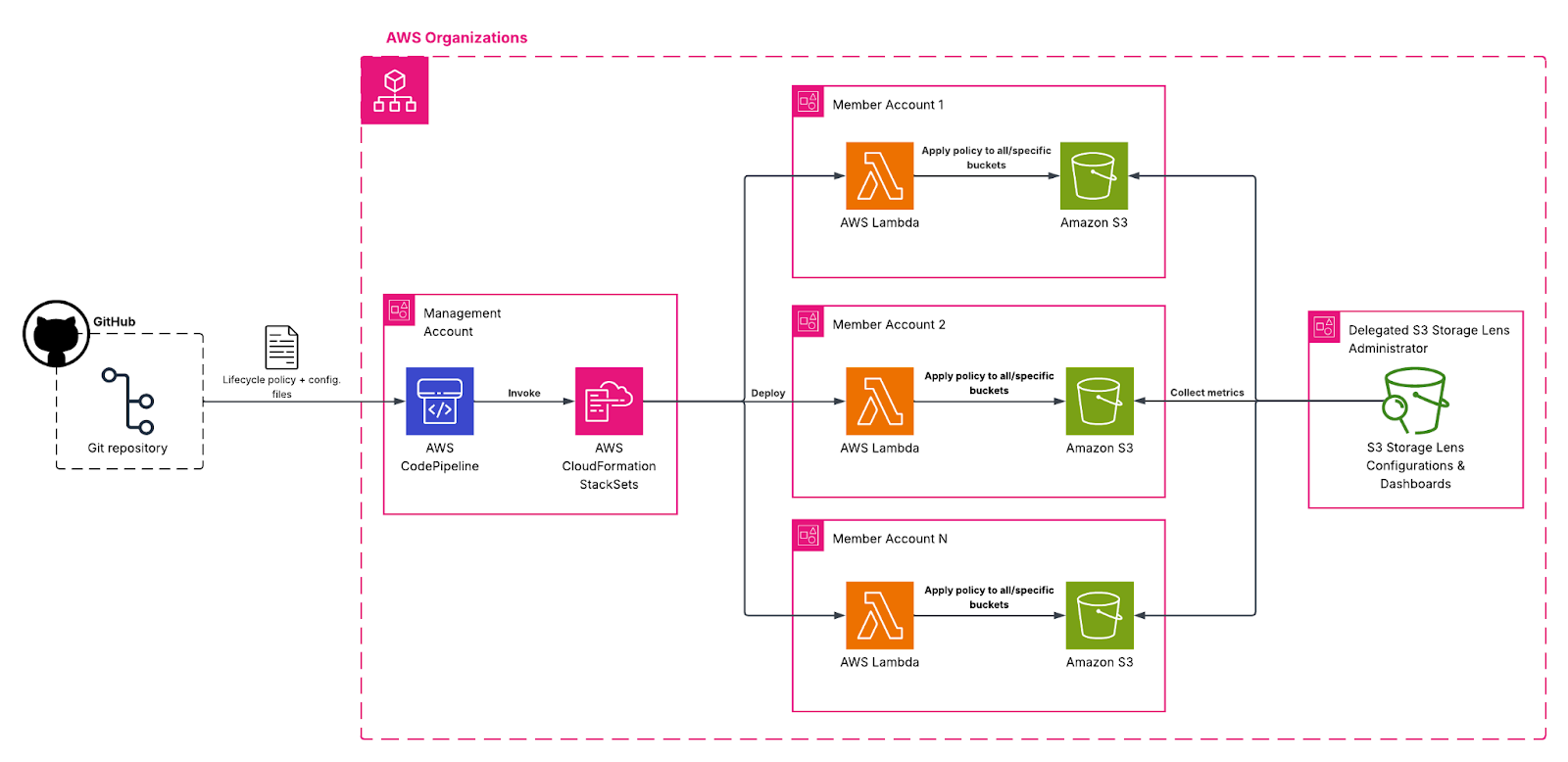

To continuously manage and deploy lifecycle policies across your entire organization, you can combine GitHub, AWS CodePipeline and AWS CloudFormation StackSets with a Lambda-backed custom resource. In this setup, your GitHub repository serves as the single source of truth, storing one or more lifecycle policy files (such as lifecycle.json) and optional configuration files that define which accounts or buckets the policies should apply to. When a pull request is approved, CodePipeline automatically retrieves the latest version of these files, packages them as an artifact and stores them in a central S3 artifact bucket. The pipeline then triggers a CloudFormation StackSet that deploys a Lambda function into each target account. This Lambda has permissions to read from the central artifact bucket and to apply or remove lifecycle configurations within its own account. Once deployed, the Lambda downloads the lifecycle policy and configuration files from the artifact bucket, determines which buckets are in scope and applies the defined lifecycle rules - transitioning data, expiring old versions or cleaning up incomplete uploads as specified. This approach provides a simple and automated way to deliver consistent lifecycle management across all accounts, ensuring that your S3 storage remains optimized and cost-effective as your environments change.
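The scoping and apply steps performed by the Lambda function can be sketched as follows. This is a minimal sketch under stated assumptions, not the actual implementation: the `include`/`exclude` configuration format and both function names are hypothetical, and the S3 client is passed in as a parameter so the logic can be exercised without AWS credentials. The only AWS call used is boto3's `put_bucket_lifecycle_configuration`.

```python
import fnmatch
from typing import Iterable, List


def buckets_in_scope(bucket_names: Iterable[str], config: dict) -> List[str]:
    """Select buckets using glob patterns from a (hypothetical) config file."""
    include = config.get("include", ["*"])  # default: every bucket
    exclude = config.get("exclude", [])
    selected = []
    for name in bucket_names:
        included = any(fnmatch.fnmatchcase(name, p) for p in include)
        excluded = any(fnmatch.fnmatchcase(name, p) for p in exclude)
        if included and not excluded:
            selected.append(name)
    return selected


def apply_policy(s3_client, bucket_names: Iterable[str], policy: dict) -> List[str]:
    """Apply the same lifecycle configuration to each bucket in scope."""
    applied = []
    for name in bucket_names:
        # Replaces any existing lifecycle configuration on the bucket.
        s3_client.put_bucket_lifecycle_configuration(
            Bucket=name, LifecycleConfiguration=policy
        )
        applied.append(name)
    return applied
```

Inside the Lambda handler, `s3_client` would typically be `boto3.client("s3")`, the bucket names would come from `list_buckets()`, and `policy` would be the parsed contents of `lifecycle.json` downloaded from the artifact bucket.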

Additionally, if you want to ensure that no manual changes are made to the lifecycle configuration of your S3 buckets in the member accounts, you can use AWS Organizations Service Control Policies (SCPs) to restrict the relevant permissions. Both the PutBucketLifecycleConfiguration and DeleteBucketLifecycle API operations are authorized by the s3:PutLifecycleConfiguration IAM action. By creating an SCP that denies s3:PutLifecycleConfiguration for all principals except, for example, the Lambda function’s execution role, you can prevent users or other roles in the member accounts from manually modifying or deleting lifecycle rules.
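A possible shape for such an SCP is sketched below as a small Python helper that builds the policy document. Treat it as an illustration under assumptions: the role name is hypothetical, and the sketch denies `s3:PutLifecycleConfiguration`, which to our understanding is the IAM action that authorizes both setting and deleting a bucket lifecycle configuration. Verify the action name against the Service Authorization Reference before deploying.

```python
import json


def build_lifecycle_scp(allowed_role_name: str) -> dict:
    """Build an SCP that blocks lifecycle changes except from one role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyManualLifecycleChanges",
                "Effect": "Deny",
                "Action": ["s3:PutLifecycleConfiguration"],
                "Resource": "*",
                "Condition": {
                    # Exempt the deployment role in every member account.
                    "ArnNotLike": {
                        "aws:PrincipalArn": f"arn:aws:iam::*:role/{allowed_role_name}"
                    }
                },
            }
        ],
    }


# "lifecycle-deployer-role" is a hypothetical name for the Lambda execution role.
scp_document = json.dumps(build_lifecycle_scp("lifecycle-deployer-role"), indent=2)
```

The resulting JSON string can be attached as an SCP to the organizational units that contain the member accounts.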

The diagram in this section shows the architecture of a multi-account environment with S3 Storage Lens integration and continuous deployment of S3 bucket lifecycle policies.

Complementing S3 Storage Lens with AWS Trusted Advisor and AWS Config

While S3 Storage Lens provides detailed storage-level metrics, both AWS Trusted Advisor and AWS Config complement it by offering proactive checks, recommendations and governance for your S3 environment.

Trusted Advisor includes cost optimization checks for S3, such as identifying buckets with no lifecycle policies and buckets that do not have rules configured to delete incomplete multipart uploads after seven days [6]. These checks provide high-level guardrails to help prevent common misconfigurations and unnecessary storage costs.

AWS Config, on the other hand, enables continuous monitoring and evaluation of your S3 bucket configurations across accounts. You can use AWS managed rules, such as the s3-lifecycle-policy-check (which is also referenced by the Trusted Advisor check), to automatically detect buckets without lifecycle policy configurations. When a bucket is identified as noncompliant, a custom remediation action can be configured to automatically apply a predefined default lifecycle policy, ensuring consistent cost management across accounts. In addition, you can create custom Config rules using AWS Lambda to enforce more complex, organization-specific cost optimization logic. For example, a custom rule could be scheduled to execute periodically and flag buckets where the ratio of noncurrent version bytes to total storage exceeds a threshold, helping identify potential targets for lifecycle policy refinement. To manage these rules at scale across multiple accounts in an AWS Organization, you can leverage a Config aggregator, which provides a unified dashboard showing the compliance status of all your rules across accounts and regions [7]. Additionally, AWS Config conformance packs allow you to package and deploy a set of managed and custom rules consistently across multiple accounts and regions, ensuring organization-wide enforcement of storage best practices.
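The custom rule mentioned above, which flags buckets whose noncurrent share exceeds a threshold, could be built around an evaluation function like the following. This is a hedged sketch: how the byte counts are obtained is left out, the function name and the 0.3 threshold are hypothetical, and total storage is approximated as current plus noncurrent bytes. The returned dictionary uses the field names that AWS Config expects for entries passed to `put_evaluations`.

```python
from datetime import datetime, timezone
from typing import Optional


def evaluate_bucket(
    bucket_name: str,
    current_bytes: int,
    noncurrent_bytes: int,
    threshold: float = 0.3,
    now: Optional[datetime] = None,
) -> dict:
    """Build one AWS Config evaluation for a bucket's noncurrent ratio."""
    # Assumption: total storage is current + noncurrent bytes.
    total = current_bytes + noncurrent_bytes
    ratio = noncurrent_bytes / total if total else 0.0
    compliance = "NON_COMPLIANT" if ratio > threshold else "COMPLIANT"
    return {
        "ComplianceResourceType": "AWS::S3::Bucket",
        "ComplianceResourceId": bucket_name,
        "ComplianceType": compliance,
        "Annotation": f"noncurrent ratio {ratio:.2f} (threshold {threshold:.2f})",
        "OrderingTimestamp": now or datetime.now(timezone.utc),
    }
```

In a Lambda-backed rule, a list of these dictionaries would be sent to AWS Config together with the result token from the invoking event.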

By combining Trusted Advisor’s automated recommendations, AWS Config’s continuous compliance checks and S3 Storage Lens’s granular metrics, you gain a comprehensive view of your S3 storage environment. This integrated approach helps detect inefficiencies, enforce best practices and remediate issues automatically, keeping your storage both cost-effective and well-managed.

What This Means for You

S3 Storage Lens and lifecycle policies provide organizations with a comprehensive framework for understanding and optimizing S3 storage costs. By monitoring metrics such as CurrentVersionStorageBytes, NonCurrentVersionStorageBytes, DeleteMarkerObjectCount and IncompleteMultipartUploadStorageBytes, you gain clearer insight into how and where storage spend is accumulating. This visibility makes it easier to track usage trends, identify inefficiencies and implement strategies to optimize costs. Optionally, enabling AWS Trusted Advisor and AWS Config can further enhance visibility and compliance: Trusted Advisor provides automated cost optimization checks and recommendations, while AWS Config continuously monitors bucket configurations and enforces compliance through managed or custom rules. With lifecycle rules in place, you can manage data automatically by transitioning infrequently accessed objects, expiring outdated versions and cleaning up incomplete uploads, while ensuring your data remains protected and accessible according to your business requirements.

For organizations running large-scale data lakes or high-volume data workflows across multiple accounts, these practices are particularly valuable, as S3 costs can scale rapidly if left unmanaged. Combining detailed visibility with automated action and compliance enforcement allows you to create a foundation for cost-effective cloud storage that keeps up with growing data demands without sacrificing performance or availability.

Want to Learn More?

If you’re interested in exploring how this S3 cost optimization and lifecycle management architecture can be tailored to your organization’s specific needs, our AWS experts at Several Clouds are here to help. You can book a personalized session to discuss your storage environment, review your current practices and design a solution that balances cost efficiency and compliance. Whether you’re managing large-scale data lakes, multi-account environments or complex data workflows, we can help you implement a strategy that aligns with your business goals and maximizes the value of your S3 storage.

References

[1] “Amazon S3 Storage Lens metrics glossary”, AWS Docs, https://docs.aws.amazon.com/AmazonS3/latest/userguide/storage_lens_metrics_glossary.html

[2] “Delete incomplete multipart uploads in Amazon S3”, AWS re:Post, https://repost.aws/knowledge-center/s3-incomplete-multipart-uploads

[3] “Managing the lifecycle of objects”, AWS Docs, https://docs.aws.amazon.com/AmazonS3/latest/userguide/object-lifecycle-mgmt.html

[4] “Configuring a bucket lifecycle configuration to delete incomplete multipart uploads”, AWS Docs, https://docs.aws.amazon.com/AmazonS3/latest/userguide/mpu-abort-incomplete-mpu-lifecycle-config.html

[5] “Using Amazon S3 Storage Lens with AWS Organizations”, AWS Docs, https://docs.aws.amazon.com/AmazonS3/latest/userguide/storage_lens_with_organizations.html

[6] “Trusted Advisor: Cost optimization”, AWS Docs

[7] “Multi-Account Multi-Region Data Aggregation for AWS Config”, AWS Docs, https://docs.aws.amazon.com/config/latest/developerguide/aggregate-data.html
